Safari Books Online is awesome, many reasons, worth another blog post. For one thing you get to access the latest and greatest titles even early releases. You can conveniently monitor it here.

However going to this page every day is cumbersome and there don't seem to be an RSS feed. Hence it was time to set up a notification mailer that grabs the page, parses its content, checks the timestamp (less than 24 hours) and sends a nice html email. You can grab your copy / checkout the code at github.

I use the great BeautifulSoup plugin as discussed previously), including the html5lib. It's most convenient to run the script in a cron job. I use the -d (daily switch) to get all new titles in the last 24 hours, and use the -w (weekly) once a week to filter on topics that I am interested in. This last execution mode saves me time searching for these filters manually. You can also filter by publisher.

I never get to miss a book this way :)

==

Example of running it with the weekly flag and (-t) filters

# python safarinew.py -wt "Android,mobile,Java,python,hadoop, big data,security,machine learning,data mining,data science, mean stack,hacker,hacking,javascript,developer,code,coding" -e

==

CLI flags - usage:

# python safarinew.py -h Usage: safarinew.py -c : use cached html (for testing) -d : see what was added in the last day -e : send parsed HTML output to comma separated list of emails -h : print this help -p : filter on a comma separated list of publishers -r : refresh the cached html file -t : filter on a comma separated list of titles -w : see what was added the last week Note: * -d and -w are mutually exclusive * -t only works with -w (weekly report) * -c refreshes the cache file if older than 24 hours, use -r to forcefully refresh it

==

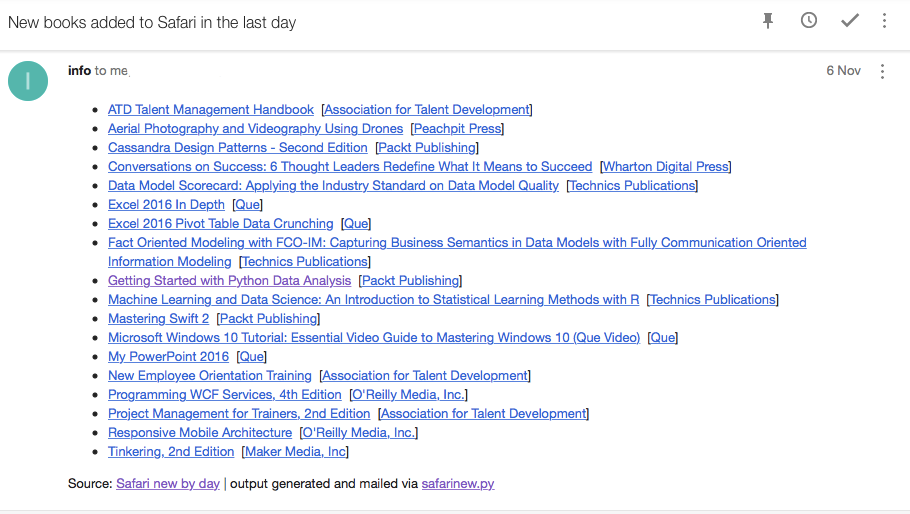

Example email:

==

Enjoy and let me know if you have additional use cases ...

==

Update 09/11/2015:

There is an RSS feed available. It seems richer in content, adding category and book cover and description, so I am definitely going to consume it and see if I can make the notification email information-richer ...

==

Update 10/11/2015:

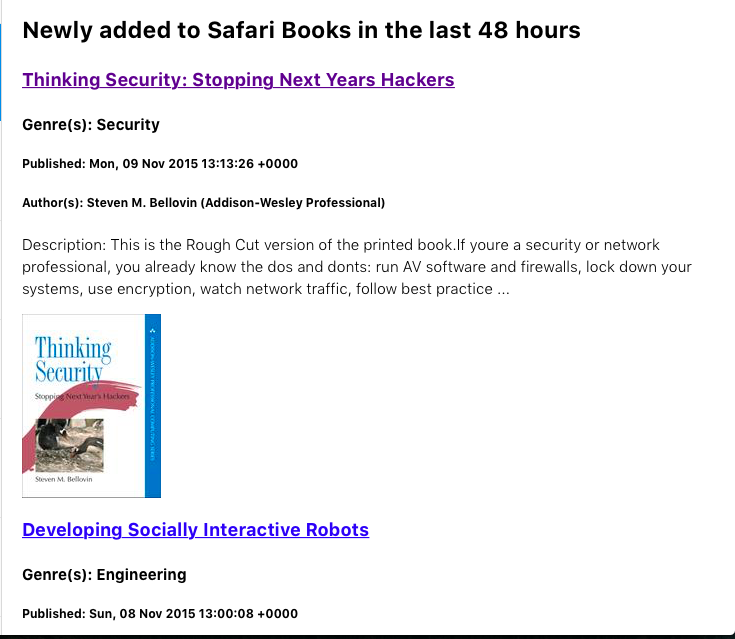

I made a new script to parse the mentioned RSS feed which stores each book entry in a Python shelve object, then prints (mails) an html output to a list of emails (in a text file). -d specifies how much time to look back, usage and run sample:

# python main.py -h Usage: main.py [options] Options: -h, --help show this help message and exit -d DAYS_BACK, --daysback=DAYS_BACK number of days to look back -m, --mail send html to recipients -t, --test use local xml for testing # python main.py -m -d 2 Downloading: https://www.safaribooksonline.com/feeds/recently-added.rss book not in shelve, shelving info for book id 9780134278223 book not in shelve, shelving info for book id 9781466697096 .. .. book not in shelve, shelving info for book id 9781119059578 book not in shelve, shelving info for book id 9780134275796 100 books processed .. html output .. .. mailing the output ..

- BeautifulSoup (3) ,

- books (16) ,

- html (7) ,

- parsing (2) ,

- python (11) ,

- safaribooks (1) ,

- webscraping (1)